- #Sitesucker facebook how to#

- #Sitesucker facebook archive#

- #Sitesucker facebook full#

- #Sitesucker facebook pro#

- #Sitesucker facebook software#

When it comes to downloading a website, there are many website downloaders available to you. One thing to know is that a local copy can age quickly, because updates to the live website are not propagated to the downloaded copy. There are many reasons why you might want a website accessible locally, but the most common are reading it offline to save on network costs and passing it to someone who does not have frequent access to the Internet. If you have coding skills, you can develop a downloader yourself, as it is not difficult: all you need is the ability to send HTTP requests, handle files, and handle exceptions.
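The three skills mentioned above (HTTP requests, file handling, and exception handling) can be illustrated with a minimal sketch that uses only the Python standard library. The function name `save_page` and its parameters are hypothetical names chosen for this example; it downloads a single URL and is not a complete downloader.

```python
import urllib.error
import urllib.request
from pathlib import Path


def save_page(url: str, dest: Path, timeout: float = 10.0) -> bool:
    """Download one URL and write the raw bytes to dest.

    Returns True on success and False if the request or the write fails.
    """
    # HTTP request, guarded by exception handling.
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            body = response.read()
    except (urllib.error.URLError, TimeoutError, ValueError) as exc:
        # URLError covers network and HTTP errors; ValueError covers malformed URLs.
        print(f"could not fetch {url}: {exc}")
        return False

    # File handling, also guarded.
    try:
        dest.parent.mkdir(parents=True, exist_ok=True)
        dest.write_bytes(body)
    except OSError as exc:
        print(f"could not write {dest}: {exc}")
        return False
    return True
```

A call such as `save_page("https://example.com/", Path("mirror/index.html"))` would store one page. A real downloader would also need to follow links and fetch images and style sheets.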


My first encounter with a website downloader was when a friend sent me the full W3Schools programming tutorials. Since then, the whole idea of downloading a website has fascinated me.


Technology has advanced so far that things we had not even thought of have now been made available. One such thing is being able to download a website and have a local copy you can access anytime, even without a network connection. Websites are designed to be accessed online, and if you want a local copy, you have to save each page of the website for offline reading, which can be time-wasting, repetitive, and error-prone. However, with the help of a website downloader, you can have a full website downloaded and saved locally in minutes. In this article, we will reveal some of the best website downloaders on the market.

A website downloader is a computer program, either a simple script or a full-fledged installable application, designed to make a website locally accessible by downloading its pages and saving them to the computer's storage.

At its most basic level, a website downloader works like a crawler: it sends a request for a webpage, saves it locally, then scrapes the internal URLs from the page and adds them to a list of URLs to be crawled. The process continues until no new internal URL is found.
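The crawl loop described here (request a page, keep it, scrape its internal URLs, queue the new ones, stop when none are left) can be sketched in Python as follows. This is an illustrative sketch, not how any particular downloader is implemented. The names `LinkCollector`, `crawl`, `fetch`, and `max_pages` are assumptions made for the example, and `fetch` is any caller-supplied function that returns a page's HTML or `None` on failure.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(
    start_url: str,
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 100,
) -> Dict[str, str]:
    """Breadth-first crawl of same-host links; returns {url: html}."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])   # URLs waiting to be crawled
    seen = {start_url}           # URLs already queued, to avoid repeats
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:         # fetch failed, skip this page
            continue
        pages[url] = html
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop any #fragment.
            absolute, _fragment = urldefrag(urljoin(url, href))
            # Only internal URLs (same host) that are new get queued.
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages
```

Passing `fetch` in as a parameter keeps the loop independent of the network, so it can be tried against an in-memory dictionary of pages before pointing it at a real site. The `max_pages` cap is a safety limit added for the sketch.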


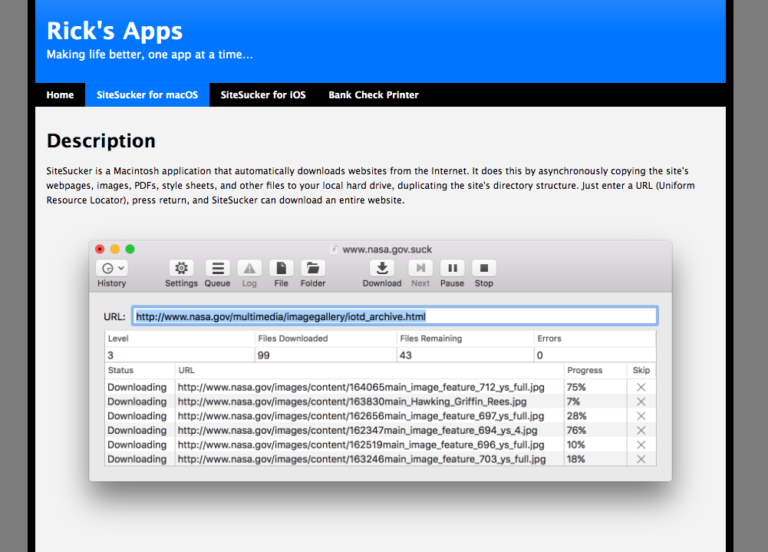

Something like SiteSucker makes a lot more sense than cloning a site for helping folks archive their work so that it can be accessible for the long term, and building that feature into Reclaim Hosting’s services would be pretty cool.

Are you looking for the best website downloader for converting a website into an offline document? Then you are on the right page, as this article discusses some of the best website downloaders on the market.

One option is cloning a site in Installatron on Reclaim Hosting, but that requires a dynamic database for a static copy. Why not just suck that site? And while cloning a site using Installatron is cheaper and easier given it’s built into Reclaim offerings, it’s not all that sustainable for us or them. All those database-driven sites need to be updated, maintained, and protected from hackers and spam.

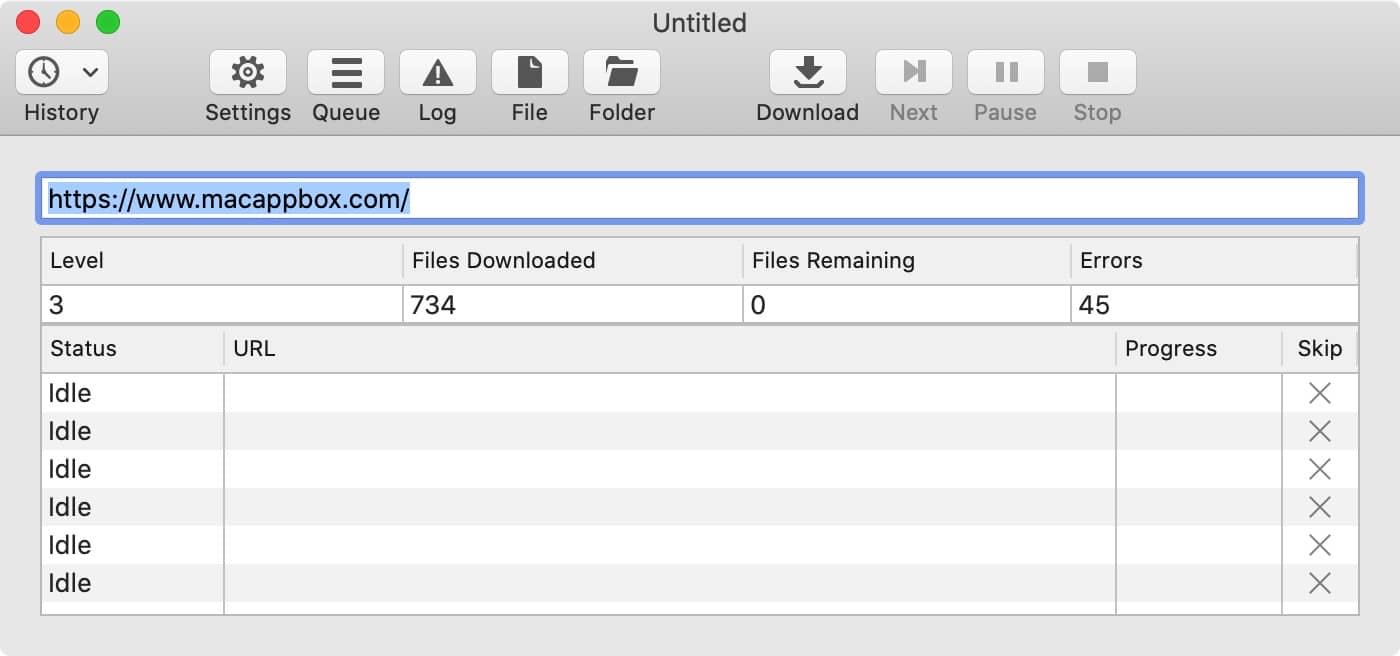

SiteSucker can download files unmodified, or it can “localize” the files it downloads, allowing you to browse a site offline.

Version 2.7.2: Fixed a bug that could cause SiteSucker to crash on OS X 10.9.x Mavericks. Fixed a bug that could cause SiteSucker to crash if it needs to ask the user for permission to open a file.

I don’t pay for that many applications, but this is one that was very much worth the $5 for me. I can see more than a few uses for my own sites, not to mention the many others I help support. And to reinforce that point, right after I finished sucking this site, a faculty member submitted a support ticket asking the best way to archive a specific moment of a site so that they could compare it with future iterations.


SiteSucker Pro 4.0.1 (multilingual, macOS, 6 MB). SiteSucker is a Macintosh application that automatically downloads websites from the Internet. It does this by asynchronously copying the site's webpages, images, PDFs, style sheets, and other files to your local hard drive, duplicating the site's directory structure. SiteSucker Pro is an enhanced version of SiteSucker that can download...

Wget is a piece of free software from GNU designed to retrieve files using the most popular inter...

HTTrack Website Copier allows you to download a website from the Internet to a local directory, recursively building all directories and getting HTML, images, and other files from the server to your computer.
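"Duplicating the site's directory structure" comes down to mapping each URL to a path under a local root folder. The sketch below shows one simple way to do that in Python. It is an assumption-laden illustration, not the method used by SiteSucker, Wget, or HTTrack: the function name `local_path_for` is hypothetical, query strings are ignored, and directory URLs are saved as `index.html`.

```python
from pathlib import Path, PurePosixPath
from urllib.parse import unquote, urlparse


def local_path_for(url: str, root: Path) -> Path:
    """Map a URL to a file path under root that mirrors the URL's path."""
    parsed = urlparse(url)
    path = unquote(parsed.path)
    # A bare host or a directory URL gets a default file name.
    if path == "" or path.endswith("/"):
        path += "index.html"
    # Drop the leading "/" and any "." or ".." segments so the result
    # can never escape the root folder.
    parts = [p for p in PurePosixPath(path).parts if p not in ("/", ".", "..")]
    return root.joinpath(parsed.netloc, *parts)
```

With a root of `mirror`, the URL `http://example.test/css/site.css` maps to `mirror/example.test/css/site.css`, so relative links between saved files keep working.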
